Customer story

Fiber AI uses PeerDB for Postgres to ClickHouse replication at Scale

Fiber AI automates prospecting and outbound marketing for enterprises. They recently released a feature that lets their customers search tens of millions of companies, filtering on specific attributes such as funding size and employee count. Customers can then join these target accounts with a prospect database of hundreds of millions of people worldwide (billions of rows) to apply further filters on job title, years of experience, location, full-text search across profiles, and more.

Fiber AI Needed a Turnkey and Reliable Way to Replicate Terabytes of Data from Postgres to ClickHouse

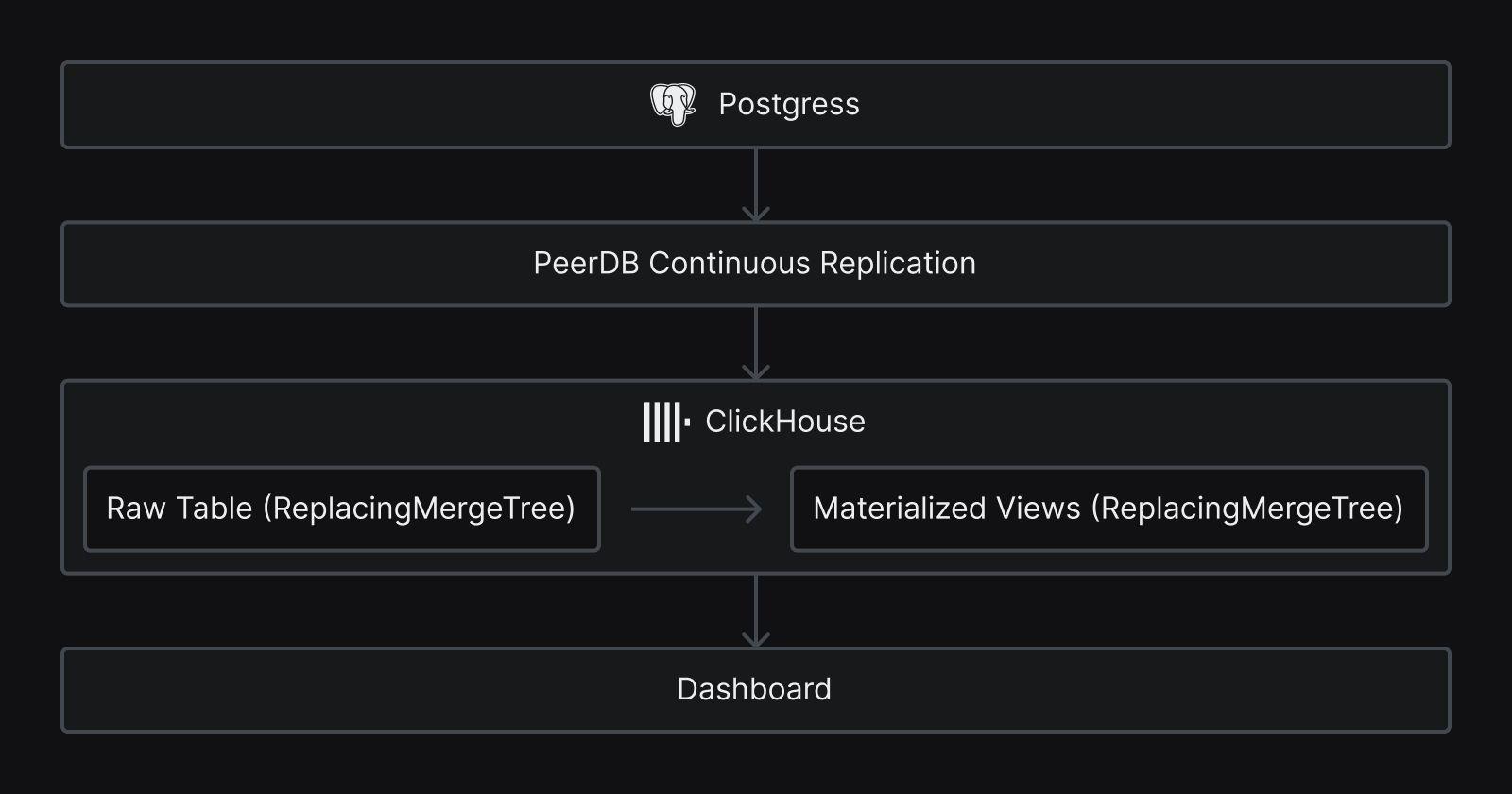

To support this feature, Fiber AI needed a database capable of searching billions of rows with sub-second performance, and they selected ClickHouse. However, all of the prospecting data was indexed in a multi-terabyte Postgres database, so they needed a reliable way to replicate this data from Postgres to ClickHouse every 15 minutes.

Reliably replicating terabytes of data in near real time from Postgres to ClickHouse is challenging because of the sheer volume of data and the architectural differences between the two databases. One option was Debezium, which is not turnkey: it requires setting up and managing multiple components, including a source connector and a sink connector. The other option was a generalized ETL tool that is not designed specifically for Postgres-to-ClickHouse replication and might not handle large data volumes well.

These options didn’t meet Fiber AI’s needs. They were looking for a tool that was both turnkey and native, with the ability to handle large-scale replication from Postgres to ClickHouse.

Fiber AI chose PeerDB for its Simplicity and Ability to Scale

Fiber AI came across PeerDB, which addressed exactly these needs: a replication tool that is simple to use and scales to multi-terabyte workloads.

At PeerDB, we are building a fast, simple, and reliable way to replicate data from Postgres to data warehouses and analytical stores; supported connectors include ClickHouse, Snowflake, and BigQuery. Unlike generalized ETL tools, we focus on quality rather than breadth of connectors, implementing native optimizations for both Postgres and ClickHouse to make replication fast and reliable. A few of them include:

- Moving terabytes in a few hours instead of days, using parallel snapshotting.

- De-risking the source Postgres database by continuously consuming and flushing the replication slot.

- Efficient ingestion into ClickHouse, using a compressed data format (Avro) staged on S3.
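This post does not describe how PeerDB implements parallel snapshotting internally, so the following is only a minimal sketch of the general technique, under stated assumptions: a large table is divided into contiguous ranges of physical pages using Postgres's `ctid` system column, and each range is copied by a separate worker. The function names `ctid_page_ranges` and `partition_queries` and the table name `people` are hypothetical. In practice the page count would be an estimate (for example, `pg_relation_size` divided by the block size), so the last range is left open-ended to catch any rows beyond that estimate.

```python
from typing import List, Tuple


def ctid_page_ranges(total_pages: int, num_partitions: int) -> List[Tuple[int, int]]:
    """Split pages [0, total_pages) into contiguous, non-overlapping ranges.

    Returns at most num_partitions (start_page, end_page) pairs, end exclusive,
    with sizes differing by at most one page.
    """
    if total_pages <= 0 or num_partitions <= 0:
        return []
    num_partitions = min(num_partitions, total_pages)  # avoid empty ranges
    base, extra = divmod(total_pages, num_partitions)
    ranges = []
    start = 0
    for i in range(num_partitions):
        size = base + (1 if i < extra else 0)  # spread the remainder over the first ranges
        ranges.append((start, start + size))
        start += size
    return ranges


def partition_queries(table: str, total_pages: int, num_partitions: int) -> List[str]:
    """Build one SELECT per page range, filtering on the ctid system column.

    The last range has no upper bound, so rows on pages beyond the
    (estimated) page count are still copied.
    """
    ranges = ctid_page_ranges(total_pages, num_partitions)
    queries = []
    for i, (start, end) in enumerate(ranges):
        where = f"ctid >= '({start},0)'::tid"
        if i < len(ranges) - 1:
            where += f" AND ctid < '({end},0)'::tid"
        queries.append(f"SELECT * FROM {table} WHERE {where}")
    return queries


# Hypothetical example: a 10-page table split across 3 workers.
for q in partition_queries("people", 10, 3):
    print(q)
```

For a consistent snapshot, each worker would typically run its query in a transaction that imports the same exported snapshot (`pg_export_snapshot()` together with `SET TRANSACTION SNAPSHOT`). On Postgres 14 and later, such `ctid` range predicates can be executed as a TID Range Scan rather than a full sequential scan.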

PeerDB is very easy to use. Users simply need to connect their Postgres and ClickHouse databases as peers and create a mirror to start replicating data within minutes.

Given these scalability, reliability, and ease-of-use capabilities, Fiber AI chose PeerDB.

“Thankful we found PeerDB to migrate our Postgres instance to Clickhouse - their product works incredibly quickly and is very reliable!! The team has been very helpful in optimizing our workloads and advising on best practices - massive value add and time savings, highly recommended!” -Aditya Agashe, CEO of Fiber AI

Fiber AI migrated to PeerDB within a couple of days

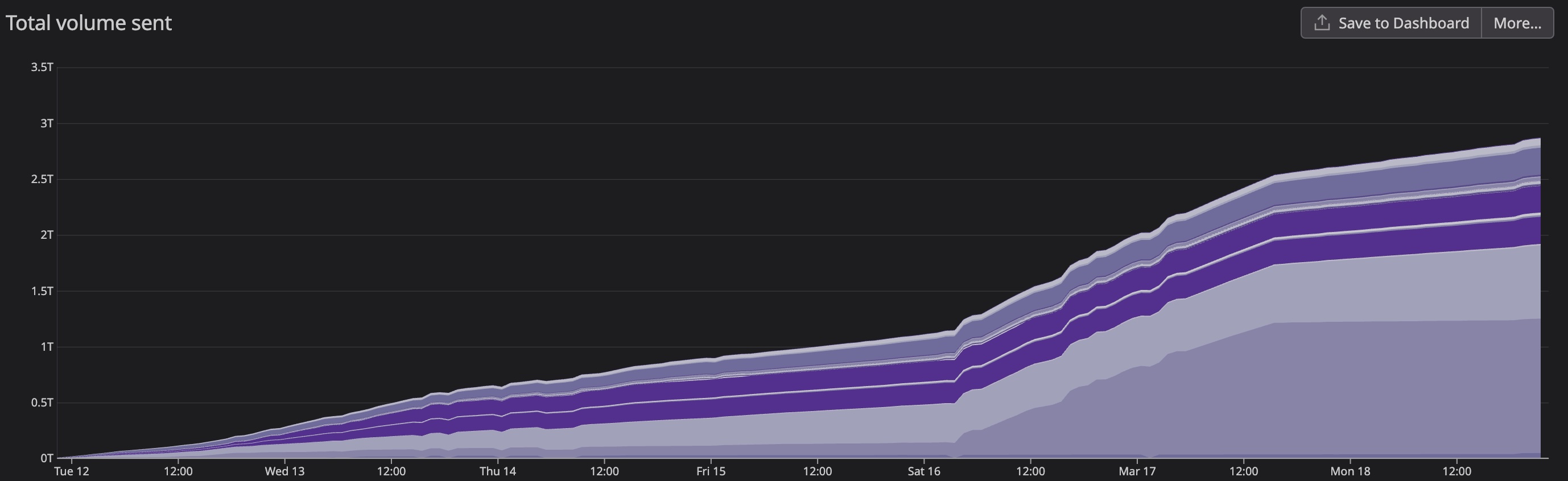

After PeerDB released its native ClickHouse connector, Fiber AI tested it. Within a couple of days, they were able to cut over to production and deliver a critical search feature to their enterprise customers. PeerDB moved 3 TB of data from Postgres to ClickHouse in less than a day and then began replicating all subsequent changes using Change Data Capture (CDC).

“We’re using this connector already for our Postgres to ClickHouse ETL and it’s insanely fast and accurate! Can’t believe how well this works. The PeerDB team has been super helpful in getting us set up, helping us debug, and advising us on everything related to ClickHouse and Postgres. Great work guys!!” -Neel Mehta, CTO of Fiber AI

Some Numbers

To give an idea of the amount of data PeerDB handles in this use case, here are a few numbers:

| Metric | Scale |
|---|---|
| Data size during initial load | 3 TB |
| Rows moved per month | 1 billion |
| Data volume per month | 3 TB (incl. INSERTs, UPDATEs, and DELETEs) |
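To put the monthly figures above in perspective, a quick back-of-the-envelope calculation converts them into average rates. This is a sketch that assumes a 30-day month, decimal units (1 TB = 10^12 bytes), and perfectly even traffic; real CDC traffic is bursty, so peak rates will be higher than these averages. The function name `average_rates` is hypothetical.

```python
# Back-of-the-envelope averages for the monthly replication figures.
# Assumptions: a 30-day month, decimal units, and evenly spread traffic.
SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # 2,592,000 seconds
SYNC_INTERVAL_S = 15 * 60              # the 15-minute replication target

ROWS_PER_MONTH = 1_000_000_000         # 1 billion rows
BYTES_PER_MONTH = 3 * 10**12           # 3 TB


def average_rates(rows: int, nbytes: int, period_s: int, interval_s: int) -> dict:
    """Spread a period's totals evenly over seconds and over sync windows."""
    windows = period_s // interval_s  # number of sync windows in the period
    return {
        "rows_per_second": rows / period_s,
        "mb_per_second": nbytes / period_s / 10**6,
        "sync_windows": windows,
        "rows_per_window": rows / windows,
        "gb_per_window": nbytes / windows / 10**9,
    }


rates = average_rates(ROWS_PER_MONTH, BYTES_PER_MONTH, SECONDS_PER_MONTH, SYNC_INTERVAL_S)
for name, value in rates.items():
    print(f"{name}: {value:,.2f}")
```

Under these assumptions, the averages come to roughly 386 rows/s and about 1.2 MB/s, or about 347,000 rows (roughly 1 GB) per 15-minute sync window.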

Try out PeerDB

We hope you enjoyed reading this customer story. If you have a use case similar to Fiber AI's and want to replicate data from Postgres to ClickHouse, give PeerDB a try.

About

Fiber AI automates prospecting & outbound marketing for enterprises.

Use case

Sales and Marketing Automation, Real-time Search, Real-time Analytics

Solutions

Postgres to ClickHouse CDC